Summer 2020 should have been the watershed season for both HDR and UHD TV production. In Europe, the UEFA Euro 2020 soccer championship was due to be followed by the Summer Olympics in Tokyo. Both events were set to make use of new technologies and provide the impetus for future live sport TV outside broadcast (OB) coverage. The COVID-19 pandemic put paid to practically all large-scale sport, forcing broadcasters and production companies to adjust their technology and focus on new remote workflows.

During the transition from SD to HD in the early 2000s, the skeptics had a field day bemoaning the new technology when images were viewed in “non-optimal” circumstances. This is even more applicable today with UHD TV. Many people have already seen UHD TV, and to the untrained eye it looks no different from HD.

If you’re going to do UHD, you’ve got to do it well at every step of the production. Resolution alone will not deliver the “wow-factor” to a production; you have to include wide color gamut (WCG), high dynamic range (HDR) and immersive audio, along with enough bandwidth into the home. Cut any of those corners and the skeptics will complain that they don’t see the point.

High Dynamic Range is a video and image technology that improves the way light is represented. It overcomes the limits of conventional Standard Dynamic Range (SDR). HDR can represent substantially brighter highlights, darker shadows, more detail and more saturated colors than was previously possible.

HDR does not increase the capability of a display; rather it allows better use of displays that have high brightness, contrast and color capabilities. Not all HDR displays have the same capabilities, so the same HDR content can look different from one display to another. HDR emerged first for video in 2014. HDR10, HDR10+, PQ10, Dolby Vision and HLG (Hybrid Log-Gamma) are common HDR formats.
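As a rough illustration of how two of these formats encode light, the sketch below implements the PQ inverse EOTF (used by HDR10 and PQ10) and the HLG OETF with the constants published in ITU-R BT.2100. The function names are illustrative choices, and the code works on single normalized values rather than full video frames.

```python
import math

# PQ (SMPTE ST 2084 / ITU-R BT.2100) constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_inverse_eotf(nits: float) -> float:
    """Map absolute display luminance (0..10000 cd/m^2) to a PQ signal in 0..1."""
    y = min(max(nits, 0.0), 10000.0) / 10000.0
    y_m1 = y ** M1
    return ((C1 + C2 * y_m1) / (1.0 + C3 * y_m1)) ** M2


# HLG (ITU-R BT.2100) constants.
A = 0.17883277
B = 1.0 - 4.0 * A
C = 0.5 - A * math.log(4.0 * A)


def hlg_oetf(scene_light: float) -> float:
    """Map normalized scene-linear light (0..1) to an HLG signal in 0..1."""
    e = min(max(scene_light, 0.0), 1.0)
    if e <= 1.0 / 12.0:
        return math.sqrt(3.0 * e)           # square-root (gamma-like) segment
    return A * math.log(12.0 * e - B) + C   # logarithmic segment for highlights


if __name__ == "__main__":
    print(f"PQ signal for 100 cd/m^2:   {pq_inverse_eotf(100.0):.3f}")
    print(f"PQ signal for 1000 cd/m^2:  {pq_inverse_eotf(1000.0):.3f}")
    print(f"HLG signal for 1/12 light:  {hlg_oetf(1.0 / 12.0):.3f}")
```

PQ is an absolute encoding: a luminance of 100 cd/m², roughly the SDR reference level, lands at about 0.51 of the signal range, leaving the upper half for highlights. HLG is relative: its lower segment is a square-root curve similar to a conventional camera gamma, with a logarithmic segment above it for highlights.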

So how much have we learned to date about implementing HDR in live TV production, and does that imply a requirement to produce a separate workflow in the OB truck? Can HDR and SDR sit side by side in live production? And what new production functions are thereby created in order to monitor and maximize the benefits of HDR through the production chain, all the way to the consumer’s HDR-enabled TV set or device?

“The Future of Live HDR Production,” a webinar assembled and led (appropriately) by Leader America and moderated by Sports Video Group, featured Prinyar Boon, Product Manager, SMPTE Fellow, Phabrix; Michael Drazin, Director Production Engineering and Technology, NBC Olympics; Pablo Garcia Soriano, Color Supervisor, Managing Director, Cromorama; Ken Kerschbaumer, Editorial Director, Sports Video Group; Kevin Salvidge, European Regional Development Manager, Leader; and Chris Seeger, Office of the CTO, Director, Advanced Content Production Technology, NBC Universal.

Introducing the session, Kevin Salvidge said, “We see HDR significantly enhancing the consumer experience, especially when it comes to live events. As with all technology innovation, managing the transition brings its own set of unique challenges. This year, we will be involved in delivering a number of major live sporting events in both HDR and SDR, so let’s have our panel tell us how they are going to do it.”

“We’ve been through a lot of HDR, from testing things at various golf events to the Fox Super Bowl two years ago,” said Michael Drazin. “Being able to do that Super Bowl-level production, with every bell and whistle being thrown at it — as well as the integration with all the various international participants in that compound sharing content — it just showed that we’re there, we can execute. There aren’t major barriers to being able to do even the biggest shows on the planet.”

“Yes, we’ve been testing at all kinds of events going back to 2016, and during that time we’ve made every mistake in the book,” Chris Seeger agreed. “We finally realized we really had to come up with objective metrics. We had to come up with a way to measure the conversions, and decide how we were going to be able to merge these two production silos of HDR and SDR. With our partners we’ve been able to reconcile all these different pieces into multiple deliverables. And so we’ve finally gotten it into a decent state at this point in 2021.”

Learning How To Deliver a Single HDR-SDR Production for BT Sport

To provide some background into the evolution of live HDR production, Prinyar Saungsomboon from Phabrix looked back to a single HDR-SDR production workflow for live soccer and rugby for BT Sport in 2019, at the launch of that broadcaster’s “Ultimate” HDR channel with Dolby Atmos.

The requirements then were to deliver an HDR experience that is differentiated from SDR; to use a “single truck” HDR-centric production workflow, as there would not be room to add an OB truck; to have negligible commercial (opex) or logistical impact; to ensure that HD SDR deliverables such as graphics, team colors and flags were not compromised; and to run on existing 10-bit 4:2:2 UHD infrastructure.
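To give a sense of scale for that infrastructure requirement, the arithmetic below estimates the uncompressed active-picture data rate of a 10-bit 4:2:2 signal. It is a back-of-envelope sketch: the 3840x2160 at 50 frames per second and 1920x1080 at 25 frames per second figures are assumptions taken from the 2160p50 and 1080i25 formats named later in this article, and blanking, audio and ancillary data are ignored.

```python
def active_payload_gbps(width: int, height: int, frames_per_second: float,
                        bit_depth: int = 10,
                        samples_per_pixel: float = 2.0) -> float:
    """Uncompressed active-picture data rate in gigabits per second.

    4:2:2 sampling carries one luma sample per pixel plus one chroma
    sample (Cb or Cr, alternating), so two samples per pixel on average.
    """
    bits_per_frame = width * height * samples_per_pixel * bit_depth
    return bits_per_frame * frames_per_second / 1e9


# Assumed formats (see lead-in): UHD 2160p50 and HD 1080i25 (25 full frames/s).
uhd_gbps = active_payload_gbps(3840, 2160, 50)
hd_gbps = active_payload_gbps(1920, 1080, 25)

if __name__ == "__main__":
    print(f"UHD 2160p50, 10-bit 4:2:2: {uhd_gbps:.4f} Gb/s")
    print(f"HD 1080i25, 10-bit 4:2:2:  {hd_gbps:.4f} Gb/s")
    print(f"Ratio UHD/HD: {uhd_gbps / hd_gbps:.1f}x")
```

Under these assumptions the UHD signal carries eight times the active-picture data of the HD one, which helps explain why reusing existing UHD infrastructure, rather than adding a parallel HDR path, was a firm requirement.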

The choice of transmission format was driven by the capability of the receiving devices, which for BT Sport was largely app-based on mobile phones, Samsung TVs and PlayStations. For distribution they chose PQ (or HDR10). The choice of live production format was HLG — as that was the only common denominator for all camera systems — and the choice of contribution feed was S-Log3, which was compatible with the Sony cameras used by the host broadcast provider at the time.

“We learned that the overall schedule for a typical OB, like a Premier League match, cannot be extended; it is not commercially viable. The schedule is already very complicated, so it can only be ‘tweaked’ for HDR,” said Saungsomboon.

“For a typical Premier League match there are a lot of deliverables: BT Sport program feed in 2160p50 HDR and 1080i25 SDR; world feed in 2160p50 SDR and 1080i25 SDR; English Premier League ISO feeds are 1080i25 SDR; third-party feeds (such as stadium Jumbotron and medical); VAR [Video Assistant Referee]; and BTS onsite studio productions included with in-vision monitors. So we’ve been through the whole gamut of what you have to do to bring a full system together.

“We could not have done this before 2019,” he said. “It was only then that key enabling technology such as HDR television sets, smart phones and tablets, along with HDR-enabled stabilized camera rigs (Steadicams) and associated 10-bit RF links providing pitch-side presentation, team line-ups and other roving shots became available. We also then had HDR-enabled shoulder and underarm mount cameras and associated 10-bit RF links providing roving coverage such as team arrivals, tunnel shots and pitch-side match coverage.

“We have replay servers with 10-bit compression codecs and high density channel count, along with HDR super slow-motion and hyper slow-motion cameras covering slo-mo HDR replays. There is also a new generation of HDR conversion equipment and HDR-capable graphics generators and processors,” said Saungsomboon.

BT Sport produced its own UHD HDR feed for the 2019 UEFA Champions League Final in Madrid in June and then launched its BT Sport Ultimate channel at the Community Shield at Wembley in August with 4K, HDR, 50 frames per second and Dolby Atmos sound. This enabled 4K broadcasts on 4K TVs, Xbox One S and X consoles, Google’s Chromecast Ultra streamer and the Apple TV 4K. Samsung 4K smart TVs (2018 models onwards), the Google Chromecast Ultra and Xbox One S and X also supported HDR.

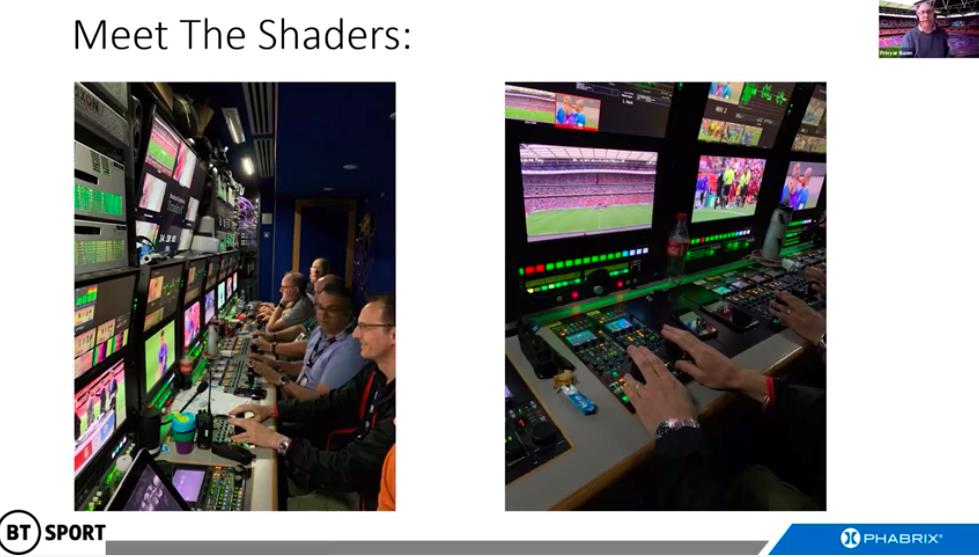

“You can’t flip a whole OB truck to HDR; it has got to remain useful and flexible,” said Saungsomboon. For replay codecs and RF cameras you have to move to 10-bit, so there’s a hit there. A new HDR Vision Supervisor position had to be put in place, along with an HDR FACs Check procedure. And you have a whole team of Shaders monitoring the multicamera production, all overseen by the HDR Vision Supervisor.

“What we did, in order to meet all the requirements, was to develop a technique called Closed Loop Shading, where Shaders continued to work in SDR. They just carry on doing their job. But we basically have an HDR production from which we derive SDR — we don’t have a parallel production process or an add-on from the output of the cameras.

“So it’s a closed loop. Why do this? Because what you see is what you get: nothing else changes. The SDR is critical, and you have to manage that constantly — especially as the weather can vary wildly over the course of a 90-minute game, from bright sunshine to cloud and rain and even snow,” said Saungsomboon.

“This technique works for any HDR format. It works for every camera system, and SDR cameras are mapped to HDR and then also pass through the same down mapper — inheriting the Tone Mapper ‘look.’ Vision Guarantees and Shaders can continue to control the ‘look’ of all the program cameras in all lighting conditions, and guarantee the production. I implore anyone who is doing this to use this technique as the foundation and then build on that output,” he said.
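The signal flow described above can be sketched in a few lines. This is a toy model under stated assumptions, not the BT Sport implementation: the linear up-mapper, the clipping down-mapper, the `SDR_WHITE_IN_HDR` constant and the `Camera` class are all hypothetical stand-ins for the real conversion equipment. The sketch only shows the structure, in which every source joins one HDR program and the SDR that Shaders watch is always derived from that program through the same down-mapper.

```python
from dataclasses import dataclass

# Hypothetical constant: where SDR peak white sits within the HDR signal range.
SDR_WHITE_IN_HDR = 0.75


def up_map(sdr_level: float) -> float:
    """Toy SDR-to-HDR up-mapper: place SDR 0..1 into HDR 0..SDR_WHITE_IN_HDR."""
    return sdr_level * SDR_WHITE_IN_HDR


def down_map(hdr_level: float) -> float:
    """Toy HDR-to-SDR down-mapper: inverse of up_map, clipping HDR highlights."""
    return min(hdr_level / SDR_WHITE_IN_HDR, 1.0)


@dataclass
class Camera:
    name: str
    native_hdr: bool
    level: float  # normalized 0..1 signal level in the camera's native range


def hdr_program_level(cam: Camera) -> float:
    """Every source enters the single HDR production; SDR sources are up-mapped."""
    return cam.level if cam.native_hdr else up_map(cam.level)


def shader_monitor_level(cam: Camera) -> float:
    """What the Shader sees: SDR derived from the HDR program, closing the loop."""
    return down_map(hdr_program_level(cam))


if __name__ == "__main__":
    cameras = [
        Camera("main wide (HDR)", native_hdr=True, level=0.30),
        Camera("specular highlight (HDR)", native_hdr=True, level=0.90),
        Camera("third-party feed (SDR)", native_hdr=False, level=0.60),
    ]
    for cam in cameras:
        print(f"{cam.name}: HDR program {hdr_program_level(cam):.2f}, "
              f"SDR monitor {shader_monitor_level(cam):.2f}")
```

In this toy model an SDR source comes back out of the down-mapper at the level it went in, while HDR highlights above SDR white are clipped in the SDR output. Real down-mappers apply a tone curve rather than a hard clip, but the closed-loop structure is the same.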

NBC Olympics and Universal Urge Collaboration for LookUp Tables

“It’s been quite an evolution from where we were to where we are today and where we’re going,” said Drazin. “Our philosophy is we need to keep this thing simple and repeatable. Content created in SDR needs to look the same way in HDR.

“As you transition from a 709 boundary to a 2020 boundary, everything that exists in 709 should still be represented the same way in HDR and then back to SDR the same way. That is one of the core principles of our philosophy. And the other one is that we did a lot of trials with our Shaders in the shading environment, looking at the CCUs and what we got out of HDR and SDR sides of the CCUs.

“But maybe the biggest thing in terms of what it took to get from where we were to where we’re going is really a collaboration throughout the industry — including with our colleagues at the BBC — with whom we’ve created a set of LUTs we’re now sharing with the industry as a whole. Without this set of LUTs we wouldn’t be where we are.”
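The first principle Drazin describes, that everything inside the 709 boundary should be represented the same way inside the 2020 boundary and on the way back, can be illustrated with the color-primaries conversion alone. The sketch below uses the linear-light RGB matrix given in ITU-R BT.2087 and the commonly published four-decimal inverse. It is not the NBCU LUT, which also has to handle transfer functions and tone mapping, and the helper names are illustrative.

```python
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]

# Linear-light RGB conversion, BT.709 primaries -> BT.2020 primaries (ITU-R BT.2087).
M_709_TO_2020 = (
    (0.6274, 0.3293, 0.0433),
    (0.0691, 0.9195, 0.0114),
    (0.0164, 0.0880, 0.8956),
)

# Inverse conversion, BT.2020 -> BT.709, rounded to four decimals.
M_2020_TO_709 = (
    (1.6605, -0.5876, -0.0728),
    (-0.1246, 1.1329, -0.0083),
    (-0.0182, -0.1006, 1.1187),
)


def apply_matrix(m: Sequence[Sequence[float]], rgb: RGB) -> RGB:
    """Multiply a 3x3 matrix by a linear-light RGB triple."""
    r, g, b = (sum(c * v for c, v in zip(row, rgb)) for row in m)
    return (r, g, b)


def in_gamut(rgb: RGB, tol: float = 1e-3) -> bool:
    """True if every component lies within 0..1, allowing for rounding error."""
    return all(-tol <= v <= 1.0 + tol for v in rgb)


if __name__ == "__main__":
    red_709 = (1.0, 0.0, 0.0)
    as_2020 = apply_matrix(M_709_TO_2020, red_709)
    back = apply_matrix(M_2020_TO_709, as_2020)
    print("709 red in a 2020 container:", as_2020, "in gamut:", in_gamut(as_2020))
    print("round trip back to 709:     ", back)

    red_2020 = (1.0, 0.0, 0.0)
    as_709 = apply_matrix(M_2020_TO_709, red_2020)
    print("2020 red expressed in 709:  ", as_709, "in gamut:", in_gamut(as_709))
```

Any 709 color lands inside the 2020 container and returns to its starting values to within rounding error, while a saturated 2020 primary produces negative 709 components. Those out-of-range values are what a down-conversion has to map back into the SDR gamut.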

A statement released by Chris Seeger and Michael Drazin, titled “NBCU LUTs and Single Stream Recommendation,” notes that “NBCU, in collaboration with Cromorama, and building on ITU working group discussions for HDR operational practices involving Dolby, BBC and Philips, has developed techniques to enable ‘single-stream’ production that feeds both UHD HDR and SDR transmission simultaneously.”

“The NBCU LUTs developed for this workflow,” the statement continues, “enable single-stream production whereby the HDR and SDR products are consistent to the point where the benefits of HDR are realized, making a unified production possible. Subsequently we’re sharing these efforts with the broadcast community for continued collaboration and use in production and distribution.

“The NBCU LUTs include both HLG and PQ LUTs following similar HDR/SDR conversion methodology and color science. NBC has a commitment to industry collaboration and would like to encourage consistent media exchange, therefore we are willing to provide the NBCU LUTs freely. The NBCU LUTs are provided on an ‘as is’ basis with no warranties.”

“The whole goal,” said Drazin, “is to get everybody to work together the way we do today. Nobody is concerned about sharing SDR content from entity to entity, and we need to get there in HDR. By making it totally publicly available we feel it’s a first step to get there, and if people have something better to offer we hope they would do so to continue the collaboration.

“The focus of the entire conversion effort,” continued Drazin, “is to maintain the original artistic intent such that the SDR derived from the NBCU LUT compared side-by-side is consistent with the HDR until the point where the advantages of HDR are realized — even with a reduction in dynamic range in the converted SDR.”